There are a number of reasons a webmaster might want to mark up structured content in a way that describes a data type more clearly to search engines. Whilst this doesn’t have a causal effect on rank improvements, having more of your content “understood” more clearly must be a positive, right? There is some concern that marking up your page content with Microdata, Microformats or RDFa may in some cases let search engines know enough about your page content and comparative data elements to bypass your page entirely, serving comparative results in-SERP. In most cases this seems somewhat alarmist when there are significant benefits to be had. This isn’t a how-to post, as I wanted to focus more on quantifying the CTR benefits and the difficulties inherent in trying to do so.

That said, if you are new to why and how to mark up structured data, there are plenty of resources available. Here’s an overview introduction from Google, which includes a video. Then there’s Schema.org, a single vocabulary that all the major search engines support, meaning that going forward implementing Schema will render your rich snippets in search results for all of them. (Prior to that there was some differentiation from engine to engine as to which format would render.) In addition, there’s a shed-load of walkthroughs and implementation tips on SEO Gadget.

Microformats & CTR

That successfully implementing microformats that render in your search snippets increases CTR is largely common sense. Anyone with any experience in SEO understands it pretty much innately: make your search result more relevant and attractive than the in-SERP competition and you’re bound to attract more clicks. Making a solid case to a client on the basis of experience and anecdotal references can be very difficult, however, particularly when working with large e-commerce sites with heavy development schedules and a hundred and one other things to work on. Sure, visits may go up post-implementation, but there are so many additional factors contributing to visits and CTR that it can be very difficult to quantify success at a granular level. There are some case studies around (some good examples collated here), but many of them are from enormous online retailers sharing macro data (overall increase in search share). Finding tangible term-level data is almost impossible, not least because when it comes to measuring CTR the only real primary data source is Webmaster Tools, which for obvious reasons doesn’t give exact values.

Whinging aside, we recently tried to quantify CTR uplift post-implementation of hReview and thought it might be worth sharing for anyone that needs persuading. First off, let’s define CTR and the factors known to affect it.

Click-through Rate

The rate at which searchers click your result when it appears in search results (a search impression), expressed as a percentage. So if my page is seen in the search results 5,000 times in a month and receives 100 clicks, that’s 100 / 5,000 × 100 = 2%.
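For anyone who wants to run the sum over a whole export rather than by hand, here is a minimal sketch of the calculation above. The function name `click_through_rate` is purely illustrative, and returning zero for a term with no impressions is my own assumption rather than anything Webmaster Tools does.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage of impressions.

    Returns 0.0 when there are no impressions (an assumption made here
    to avoid dividing by zero).
    """
    if impressions <= 0:
        return 0.0
    return clicks * 100 / impressions


# The worked example from the text: 100 clicks on 5,000 impressions.
print(click_through_rate(100, 5000))  # 2.0
```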

Many factors can positively or negatively impact CTR, the most obvious being aggregate rank, or we wouldn’t have jobs, right? In addition, the relative attractiveness of your listing can be improved by optimising the meta title and description, should that be the data the search engine is displaying in the listing. Then there are factors outside our control, such as brand recognition and trust of our listing and those of proximal competitors.

Step One

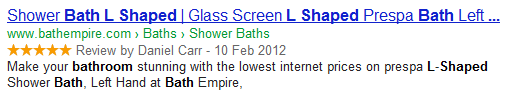

We implemented Thing > CreativeWork > Review schema on product-level pages of an online bathroom retailer’s website, which was showing up for all pages by December 25th. OOOOh, shiny!

Step Two

We took an export of the search query data for the month preceding and up to December 25th, and here’s where it gets tricky. First off, we all know that Webmaster Tools data is inexact. Impression data is clearly rounded (off, up, down, sideways – who knows?). Then of course, to calculate CTR as in the example above, a term needs a (relatively) significant volume of queries to register enough impressions, and then enough clicks, to have a CTR value displayed at all. Most of the time (and dependent on query/sector) you need to be ranking on average around page one or the top of page two to get enough data.

It gets trickier still with microdata, because you’re implementing it on product or detail-level pages triggered by mid to long-tail queries. In our case, though we implemented on around a thousand product-level pages, only a few hundred had enough impressions to generate reliable (or rather comparable) data. Surprisingly, there aren’t thousands of people searching for ceramic disc valves. Whodathunk?

Step Three

A full month post-implementation we took another export of data and compared all terms with the required data to the previous month. Then of course there’s another thing to rule out. A large number of these terms had improved in “Average Position” (which is a relief, given it’s our job), but that meant they had to be discounted, as you can’t claim a CTR victory for microdata when “Average Position” has improved as well. Of course “Average Position” is in itself nice and vague, as it averages the position(s) in which your result may appear.

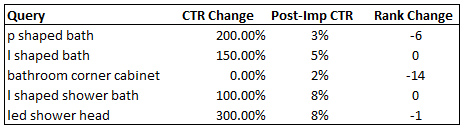

Once we had stripped out all the rule-outs, we were left with only five terms that had stayed the same or dropped in average position month on month.

*Post Imp CTR = post-implementation CTR, the average CTR for the month after December 25th.
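The rule-outs in steps two and three can be sketched roughly as below. This is not the exact process we ran, just an illustration: the `TermStats` class, the `comparable_terms` function, the threshold of 100 impressions and all the figures are made up for the example, and it assumes average position is a number where lower means a better ranking.

```python
from dataclasses import dataclass


@dataclass
class TermStats:
    impressions: int
    clicks: int
    avg_position: float  # assumed: 1.0 is the top result, lower is better


def comparable_terms(before, after, min_impressions=100):
    """Return the terms that survive the rule-outs, sorted alphabetically.

    A term is kept only if it appears in both months, has at least
    min_impressions (a hypothetical threshold) in each month, and its
    average position did not improve in the second month.
    """
    kept = []
    for term, pre in before.items():
        post = after.get(term)
        if post is None:
            continue  # no data for the second month
        if min(pre.impressions, post.impressions) < min_impressions:
            continue  # not enough data to compare
        if post.avg_position < pre.avg_position:
            continue  # ranking improved, so any CTR gain can't be credited to markup
        kept.append(term)
    return sorted(kept)


# Made-up figures, purely to show the filter at work.
before = {
    "term a": TermStats(1200, 24, 4.0),
    "term b": TermStats(900, 18, 6.0),
    "term c": TermStats(40, 1, 9.0),
}
after = {
    "term a": TermStats(1100, 33, 4.0),  # same position: kept
    "term b": TermStats(1000, 30, 3.0),  # position improved: discarded
    "term c": TermStats(60, 2, 9.0),     # too few impressions: discarded
}
print(comparable_terms(before, after))  # ['term a']
```

Filtering this strictly is what shrinks a few hundred candidate terms down to a handful, which is the trade-off for being able to say position wasn't the cause.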

Results

So though there’s just a handful of results that still qualify for any meaningful month-on-month comparison, the actual percentage increase in each case is quite substantial. Even on the term that dropped by 14 positions, CTR stayed at 2%, which is pretty darn strong. (It’s worth pointing out that their primary listing for this term stayed in the same page one position, but a second result for the query fell, dragging down the average.)
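When quoting a “percentage increase” in CTR it helps to be clear whether you mean percentage points or relative change, since the two can sound very different. A minimal sketch follows; the function name `ctr_uplift` and the numbers are illustrative only and are not taken from the table above.

```python
def ctr_uplift(pre_ctr: float, post_ctr: float):
    """Return (percentage-point change, relative change in %).

    Both inputs are CTRs already expressed as percentages. The relative
    change is None when the starting CTR is zero, since it is undefined.
    """
    points = post_ctr - pre_ctr
    relative = None if pre_ctr == 0 else points / pre_ctr * 100
    return points, relative


# Illustrative only: a term going from 2% CTR to 3% CTR.
print(ctr_uplift(2.0, 3.0))  # (1.0, 50.0)
```

So a move from 2% to 3% is one percentage point, but a 50% relative uplift, and a client deserves to know which one is being quoted.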

Other Vagaries

In addition to the data constraints and additional factors affecting CTR, there’s also seasonal impact to consider. Whilst seasonality (particularly when comparing December to January) will almost certainly impact intent-to-purchase, it shouldn’t have much impact on intent-to-research, and therefore shouldn’t move CTR too significantly in either direction.

In conclusion, all we can say is that this is imperfect research, with a handful of qualifying test-case terms; however, the CTR uplift on said handful of terms is extremely significant when the other main driver of CTR stayed the same or decreased. Better than a poke in the eye with a shitty stick.